When does reliance on automation become over-reliance?

With more technology than ever before available to pilots to assist in operational decision-making, Mike Biasatti considers whether an overreliance on automation poses a clear and present danger to the safety of air medical operations.

Depending on where you are in your aviation career, you may never have experienced the joy of leading and lagging magnetic compass turns: undershooting north and overshooting south (in the northern hemisphere), by an amount that depends on the latitude of your location. For my instrument check ride, I was tasked with holding at an intersection formed by two VOR radials in an aircraft that had only one VOR receiver. I still have my E6B Flight Computer, though younger readers might be dismayed when they see one and find the word ‘computer’ rather misleading. These days, of course, there’s an app for that. I remember saving up in the early 1990s for the $59.99 Sporty’s charged for a flight computer of sorts (plus shipping, obviously; no Amazon Prime back then!). It looked like a modern calculator, but it would prompt you for information and spit out true airspeed, fuel burn, wind correction angle, time en route and so on. When I walked in for my commercial check ride, the examiner told me to remove the batteries. “Oh look,” he said, “your batteries failed. Pull out your whiz wheel.” (‘Whiz wheel’ is an affectionate name for the aforementioned E6B.) Back then, advanced automation was largely confined to commercial airline cockpits.

Today’s computers process flight plans in seconds, where once a 30-minute lead time was necessary. Weather briefings that once required several prompts and a 10-minute hold on a phone hardwired to the wall are now completed with the touch of a finger on a device you carry in your pocket, and navigation that required both sides of the E6B ‘whiz wheel’ and a pencil is now done in no more time than it takes to enter the departure and destination. The time-saving benefits of such technology are undeniable. But can our reliance on automation become an over-reliance? Are flight students still being taught first to work through the process manually, think it through critically and derive the appropriate information themselves? Learning is an active process, and I think that to be a good pilot you need to know what to do when automated systems fail and how to plan a flight when certain tools aren’t available.

I’m an admitted aviation nerd, and one of my guilty pleasures is watching shows that examine aviation accidents. Shows like Why Planes Crash look mostly at commercial airliner accidents, taking you through animated re-enactments and sharing the probable cause findings. One episode that particularly struck me covered the investigation into the Air France 447 accident of 1 June 2009. The Airbus A330-203 departed Rio de Janeiro for Paris and crashed into the Atlantic Ocean, killing all 228 passengers and crew. The flight was crewed by a captain and two first officers (FOs); because the flight exceeded the 10 hours a pilot may fly before a rest period is required, the third pilot allowed each of them to rest in turn. At 02:01:46 hrs, the captain left the cockpit for his rest period. At 02:10:05 hrs, less than nine minutes later, the autopilot disengaged, most likely because the pitot tubes had become blocked by ice as the aircraft encountered icing conditions. The FO took manual control of the aircraft. In turbulence, the aircraft began to roll; the FO made overcorrecting inputs and at the same time pitched the airplane into a climb of up to 7,000 FPM, taking it from its cruising altitude of 35,000 ft (FL350) to about 38,000 ft. Because the airspeed data was unreliable, the flight control system also reverted from normal law to alternate law (a control law is the computer software that translates the pilot’s sidestick movements into movements of the aircraft’s control surfaces). The aircraft reached an angle of attack of 40 degrees and began descending rapidly, even though the engines were producing 100 per cent N1. In normal law, the aircraft is designed to prevent excessive pilot inputs, but because the flight control computers were no longer receiving valid airspeed information and were operating in alternate law, many of these protections were no longer available.
At one point, the second FO took the controls to lower the nose and, as the pitot tubes began registering airspeed again, the computer recognized the stall condition and sounded the stall warning. At that stage, the first FO overrode the second FO and pitched the nose up again, putting the aircraft back into a deep stall. Because the system treated the angle-of-attack data as invalid once the measured airspeed fell very low, the stall warning then stopped, even though the aircraft was still stalled.

The Flight Data Recorder stopped recording at 02:14:28 hrs, when the aircraft’s ground speed was 107 knots and it was descending at 10,912 FPM. During the descent the aircraft had turned through more than 180 degrees, and it remained stalled throughout the three-and-a-half-minute descent from 38,000 feet.

If this can happen to a professional flight crew in an advanced airplane, I dare say it can happen to anyone.

Automation dependency has commonly been described as a situation in which pilots who routinely fly aircraft with automated systems are fully confident in their ability to control the trajectory of their aircraft only when using the full functionality of those systems. This lack of confidence usually stems from a combination of inadequate knowledge of how the automated systems behave when they are not all in use and a lack of manual flying and aircraft management competence.

In August 2009, an experienced air medical helicopter pilot on her way to pick up a patient flew into the water half a mile east of North Captiva Island, Florida, during a visual approach. When the helicopter was approximately three minutes from landing, the pilot selected ‘500 feet’ on the autopilot and the helicopter began descending to that altitude. She continued toward the airfield and made a final transmission to the flight department that she was ‘one minute out’. The pilot could not remember the exact sequence of the final 500-foot descent; however, she recalled the medical crew commenting at some point that they ‘couldn’t see anything’. She replied that the flight to Captiva is usually very dark over the water and there’s ‘never anything to see’. She remembered turning on the searchlight and, shortly afterwards, impacting the water. The accident helicopter was equipped with an automatic flight control system (AFCS). The AFCS consisted, in part, of two dual electronic autopilot modules (APMs), which acquire the helicopter’s angles and rates, compute the AFCS control laws (basic stabilization and upper-mode functions) and transmit the resulting commands to the actuators. The AFCS also includes self-monitored duplex series actuators of the smart electro-mechanical actuator (SEMA) type for the pitch and roll axes; a simplex SEMA is used for the directional axis.

On 17 December 2009, a simulator evaluation was conducted at the Eurocopter facility in Grand Prairie, Texas, under the supervision of the National Transportation Safety Board (NTSB). The accident flight was recreated in an EC145 simulator and four possible accident scenarios were identified. In the fourth scenario (which the NTSB deemed most likely), the pilot selected altitude acquire (ALT.A) and set it to 500 feet, as she reported. As commanded by ALT.A, the aircraft reached 500 feet, but the power setting (collective pitch), which the pilot must control manually, was not enough to maintain altitude at 60 kts, the aircraft’s VMIN (minimum selectable) autopilot airspeed. Unless the pilot added power by increasing collective pitch, the aircraft would then have continued to descend until it struck the water.

It’s possible that distractions, coupled with night flight over water, contributed to a loss of peripheral visual cues and depth perception, resulting in the impact with the water; it is also possible that the pilot relied on the level-off feature to capture and hold 500 feet. In this instance, everyone on board egressed successfully, survived and was rescued shortly after the impact.

Now, in 2020, how will the state of the commercial aviation industry and the growing pilot shortage affect the initial and recurrent training time afforded to ever-larger pilot classes? Boeing has projected that aviation will need 790,000 new pilots by 2037 to meet growing demand, 96,000 of them in the business aviation sector. Airbus has estimated demand at 450,000 pilots by 2035. With demand for new pilots growing faster than current training programs can meet it, and with the lure of relying on automation once initial training is over, who in the industry will insist that core manual flying skills be maintained? There’s an old joke told around pilot lounges about the future of airline crews: the crew will consist of one pilot and one dog. The pilot is there to feed the dog; the dog is there to bite the pilot if he tries to touch anything.

In February 2009, a Bombardier DHC8-402 Q400 entered an aerodynamic stall and crashed on approach to Buffalo Niagara International Airport, killing all 49 passengers and crew on board and a resident of the house it struck, a total of 50 people. In its probable cause finding, the NTSB cited: “The captain’s inappropriate response to the activation of the stick shaker, which led to an aerodynamic stall from which the airplane did not recover. After the captain reacted inappropriately to the stick shaker stall warning, the stick pusher activated. As designed, it pushed the nose down when it sensed a stall was imminent, but the captain again reacted improperly and overrode that additional safety device by pulling back again on the control column, causing the plane to stall and crash.” On 11 May 2009, information about the captain’s training record was released: The Wall Street Journal reported that the captain had failed five ‘check rides’ (hands-on tests conducted in a cockpit or a simulator) before the crash.

Standard Operating Procedures (SOPs) are understandably oriented towards maximum use of automation in the interests of efficiency as well as safety. However, they must be flexible enough to allow pilots to elect to fly without automation or with partial automation in order to maintain their competence between recurrent simulator training sessions.

On 24 November 2001, Crossair Flight 3597 was scheduled to fly from Berlin Tegel Airport in Germany to Zürich Airport in Switzerland. It crashed into a wooded range of hills near Bassersdorf and caught fire, killing 24 of the 33 people on board. The investigation report revealed that the pilot had failed to perform correct navigation and landing procedures on previous occasions, but no action had been taken to remove him from passenger flying. He was allowed to continue carrying passengers (reportedly because of a shortage of qualified Crossair pilots), despite a record that suggested broader deficiencies as a line pilot. That record included a near miss on final approach to Lugano Airport, where he came within 300 ft of colliding with the shore of a lake during his final descent, and a navigational error during a sightseeing tour over the Alps that took the flight far off its course to Sion, Switzerland. On that occasion, the pilot missed his approach into Sion and circled for several minutes over what he thought was Sion’s airport before passengers spotted road signs in Italian; the navigation error had taken them over the St Bernard Pass, and the airport they had been circling was in fact near Aosta, Italy. In my opinion, this pilot’s inability to fly the aircraft safely by hand was predictable from his background. He likely relied on automation for 95 per cent of his flying, and in the other five per cent he was never presented with conditions challenging enough to test his ability to land the aircraft manually, until this particular flight, when those skills were desperately needed.

As an aspiring certified flight instructor, I remember studying the seven Laws of Learning. Thorndike’s law of exercise has two parts: the law of use and the law of disuse. Law of use: the more often an association is used, the stronger it becomes. Law of disuse: the longer an association goes unused, the weaker it becomes. Aviation efficiency and safety are greatly enhanced by modern automation and technology, but there is a lot to be said for good old stick-and-rudder skills.

In a rapidly growing industry that is charged with ensuring the safety of the traveling public, highly competitive, strictly regulated and often operating on very tight margins, how can aviation departments balance their already strained training and staffing needs while avoiding the rare but dangerous practice of automation overreliance? And how can they ensure that a rapidly growing pilot workforce has sufficient manual flying skills to keep precise control of the aircraft in the event of an automation failure?

In 2014, Airbus announced that it had developed a training plan that devoted the first training session to manual flying, so that pilots learn to control the aircraft before automation is introduced; the changes were prompted in part by the recognition that manual flying skills were eroding over time. Industry experts recognize regular hand flying as a best practice for maintaining manual flying proficiency.

In its January 2016 audit report, ‘Enhanced FAA oversight could reduce hazards associated with increased use of flight deck automation’, the Department of Transportation’s Office of Inspector General (IG) noted: “Advances in aircraft automation have significantly contributed to safety and changed the way airline pilots perform their duties – from manually flying the aircraft to spending a majority of their time monitoring flight deck systems. While airlines have long used automation safely to improve efficiency and reduce pilot workload, several recent accidents, including the July 2013 crash of Asiana Airlines flight 214, have shown that pilots who typically fly with automation can make errors when confronted with an unexpected event or transitioning to manual flying. As a result, reliance on automation is a growing concern among industry experts, who have also questioned whether pilots are provided enough training and experience to maintain manual flying proficiency. The National Transportation Safety Board determined that the crew did not appropriately understand the aircraft’s automation systems, which contributed to the crash that killed three people and injured 187.”

The IG report further noted: “The FAA has established certain requirements governing the use of flight deck automation during commercial operations. Concerns about the effects of automation are not new. In fact, the FAA reported on the interface of flight crews and aircraft automation in 1996 and again in November 2013.”

Modern aircraft are increasingly reliant on automation for safe and efficient operation. However, automation also has the potential to cause significant incidents when misunderstood or mishandled. Furthermore, automation can put an aircraft into an undesired state from which it is difficult or impossible to recover using traditional hand-flying techniques.

The 2013 report from the Flight Deck Automation Working Group identified vulnerabilities in pilots’ manual flying skills, awareness of aircraft speed and altitude, and reliance on automation, among other findings. “Maintaining the safety of the National Airspace System depends on ensuring pilots have the skills to fly their aircraft under all conditions. Relying too heavily on automation systems may hinder a pilot’s ability to manually fly the aircraft during unexpected events,” concluded the report.

Finally, the IG noted: “While the FAA has taken steps to emphasize the importance of pilots’ manual flying and monitoring skills, the Agency can and should do more to ensure that air carriers are sufficiently training their pilots on these skills. In particular, the FAA has opportunities to improve its guidance to inspectors for evaluating both air carrier policies and training programs. These improvements can help ensure that air carriers create and maintain a culture that emphasizes pilots’ authority and manual flying skills.”

Automation has greatly enhanced operational safety and efficiency in aviation, but pilots must endeavor to master their equipment, take the opportunity to hand-fly legs of a flight where that is allowed, strive to keep their skills sharp, seek help in learning new equipment if needed and know the differences between variants of the same airframe. Flight training facilities and internal training departments need to reinforce the importance of manual flying skills during initial and recurrent training, as well as the importance of fully understanding how to use automated systems.

You’ve probably seen this message on a plaque at an FBO or pilot lounge somewhere, credited to Captain A. G. Lamplugh, a British pilot from the early days of aviation: “Aviation in itself is not inherently dangerous. But to an even greater degree than the sea, it is terribly unforgiving of any carelessness, incapacity or neglect.” Add automation to that list: like aviation, it is not inherently dangerous, but it certainly can be if pilots rely on it without maintaining both their core piloting skills and the expertise in automated systems they will need if those systems fail. Even redundant systems go offline, requiring the pilot to take the controls while systems reset. Learn it. Know it. Live it.

REFERENCES

https://app.ntsb.gov/pdfgenerator/ReportGeneratorFile.ashx?EventID=2009…

7-15-2012

The Influence of Automation on Aviation Accident and Fatality Rates: 2000-2010 Nicholas A. Koeppen Embry-Riddle Aeronautical University

https://www.skybrary.aero/index.php/Cockpit_Automation_-_Advantages_and…

https://www.forbes.com/sites/marisagarcia/2018/07/27/a-perfect-storm-pi…

https://www.oig.dot.gov/sites/default/files/FAA%20Flight%20Decek%20Auto…


Memorandum December 4, 2015 Federal Aviation Administration Response to Department of Transportation Office of Inspector General (OIG) Draft Report: Flight Deck Automation

July 2020 Issue

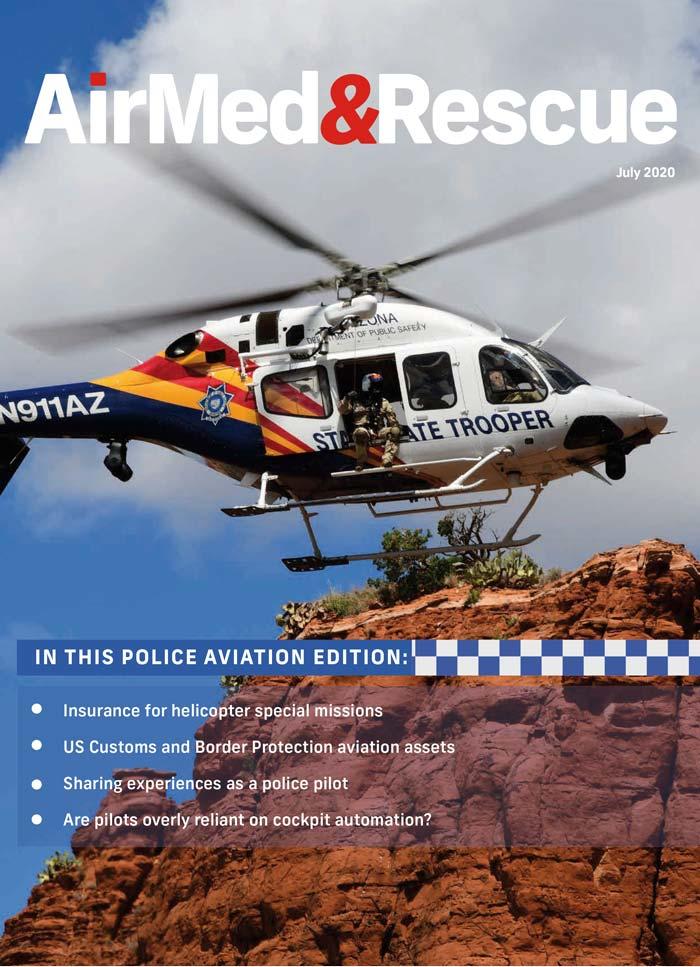

In this Police Aviation issue:

Provider Profile: San Diego Police Department Air Support Unit

Aerial assets of the US Customs and Border Patrol

The cost of special missions insurance

Sharing experiences and CRM as a police aviator

Automatic reactions: When technology isn’t an asset

Multi-agency management: Designing, creating and building a response system

Case Study: AirLec Ambulance

Interview: Cameron Curtis, AAMS President and CEO

Mandy Langfield

Mandy Langfield is Director of Publishing for Voyageur Publishing & Events. She was Editor of AirMed&Rescue from December 2017 until April 2021. Her favourite helicopter is the Chinook, having grown up near an RAF training ground!